Explanation for newbies:

- Shell is the programming language that you use when you open a terminal on Linux or macOS. Well, actually “shell” is a family of languages with many different implementations (bash, dash, ash, zsh, ksh, fish, …).

- Writing programs in shell (called “shell scripts”) is a harrowing experience because the language is optimized for interactive use at a terminal, not writing extensive applications.

- The two lines in the meme change the shell’s behavior to be slightly less headache-inducing for the programmer:

  - `set -euo pipefail` is the short form of the following three commands:
    - `set -e`: exit on the first command that fails, rather than plowing through ignoring all errors
    - `set -u`: treat references to undefined variables as errors
    - `set -o pipefail`: if a command piped into another command fails, treat that as an error
  - `export LC_ALL=C` tells other programs to not do weird things depending on locale. For example, it forces `seq` to output numbers with a period as the decimal separator, even on systems where a comma is the default decimal separator (Russian, Dutch, etc.).

- The title text references “posix”, which is a document that standardizes, among other things, what features a shell must have. POSIX does not require a shell to implement `pipefail`, so if you want your script to run on as many different platforms as possible, then you cannot use that feature.
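To make the three `set` options above concrete, here is a minimal sketch, assuming bash is installed and on the `PATH`. Each case runs in its own child `bash -c` so that a failure there does not end the demo itself; the variable names (`out_e`, `status_u`, and so on) are made up for illustration.

```shell
#!/usr/bin/env bash

# set -e: the child shell stops at `false`, so the echo never runs.
out_e=$(bash -c 'set -e; false; echo "still running"') || true

# Without -e the failure is ignored and the echo runs.
out_no_e=$(bash -c 'false; echo "still running"') || true

# set -u: expanding an unset variable is an error instead of an empty string.
if bash -c 'set -u; unset demo_unset_var; echo "$demo_unset_var"' 2>/dev/null; then
  status_u="ok"
else
  status_u="error"
fi

# pipefail: by default a pipeline reports only the status of its last command.
if bash -c 'false | true'; then status_plain=0; else status_plain=$?; fi
if bash -c 'set -o pipefail; false | true'; then status_pipefail=0; else status_pipefail=$?; fi

echo "with -e: '$out_e'  without -e: '$out_no_e'"
echo "-u: $status_u  plain pipeline: $status_plain  with pipefail: $status_pipefail"
```

The child shells are only there to keep the demonstration self-contained; in a real script the options would be set once near the top.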

Does this joke have a documentation page?

unironically, yes

This joke comes with more documentation than most packages.

Gotta love a meme that comes with a `man` page!

People say that if you have to explain the joke then it’s not funny. Not here, here the explanation is part of the joke.

It is different in spoken form, written form (chat) and written as a post (like here).

In person, you get a reaction almost immediately. Written as a short chat, you also get a reaction. But like this is more of an accessibility thing rather than the joke not being funny. You know, like those text descriptions of an image (usually for memes).

This is much better than a man page. Like, have you seen those things?

I really recommend that, if you haven’t, you look at Bash’s man page.

It’s just amazing.

Come to think of it, I probably have never done so. Now I’m scared.

I’m divided between saying it’s really great or that it should be a book and the man page should be something else.

Good thing man has search, bad thing a lot of people don’t know about that.

I think you mean a `man`zing.

I’ll see myself out.

I’m fine with my shell scripts not running on a PDP11.

`set -euo pipefail` is, in my opinion, an antipattern. This page does a really good job of explaining why. pipefail is occasionally useful, but should be toggled on and off as needed, not left on. IMO, people should just write shell the way they write Go, handling every command that could fail individually. It’s easy if you write a `die` function like this:

    die () {
      message="$1"; shift
      return_code="${1:-1}"
      printf '%s\n' "$message" 1>&2
      exit "$return_code"
    }

    # we should exit if, say, cd fails
    cd /tmp || die "Failed to cd /tmp while attempting to scrozzle foo $foo"

    # downloading something? handle the error. Don't like ternary syntax? use if
    if ! wget https://someheinousbullshit.com/"$foo"; then
      die "failed to get unscrozzled foo $foo"
    fi

It only takes a little bit of extra effort to handle the errors individually, and you get much more reliable shell scripts. To replace `-u`, just use shellcheck with your editor when writing scripts. I’d also highly recommend https://mywiki.wooledge.org/ as a resource for all things POSIX shell or Bash.
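One way to read “toggled on and off as needed” is to enable `pipefail` only around the pipeline that needs it. The following is a rough sketch under that assumption, not the commenter’s own code; `count_unique_lines` and the temporary file are hypothetical names invented for the example, and bash is assumed.

```shell
#!/usr/bin/env bash

# Hypothetical helper: count the unique lines of a file.
# pipefail is switched on inside the subshell only, so a failing `sort`
# is reported here while the rest of the script keeps the default behavior.
count_unique_lines() {
  (
    set -o pipefail
    sort -u -- "$1" | wc -l | tr -d ' '
  )
}

demo_file=$(mktemp)
printf 'b\na\nb\n' > "$demo_file"

# Succeeds: every command in the pipeline exits with status 0.
unique_count=$(count_unique_lines "$demo_file")

# Fails: sort cannot open the file, and pipefail surfaces that status.
if count_unique_lines "$demo_file.missing" >/dev/null 2>&1; then
  missing_result="ok"
else
  missing_result="failed"
fi

rm -f "$demo_file"
echo "unique lines: $unique_count, missing file: $missing_result"
```

In a script that follows the style of the comment above, the call would typically be followed by `|| die "..."` rather than an `if`.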

After tens of thousands of bash lines written, I have to disagree. The article seems to argue against use of -e due to unpredictable behavior; while that might be true, I’ve found having it in my scripts is more helpful than not.

Bash is clunky. -euo pipefail is not a silver bullet but it does improve the reliability of most scripts. Expecting the writer to check the result of each command is both unrealistic and creates a lot of noise.

When using this error handling pattern, most lines aren’t even for handling them, they’re just there to bubble it up to the caller. That is a distraction when reading a piece of code, and a nuisance when writing it.

For the few times that I actually want to handle the error (not just pass it up), I’ll do the “or” check. But if the script should just fail, -e will do just fine.

Yeah, while `-e` has a lot of limitations, it shouldn’t be thrown out with the bathwater. The unofficial strict mode can still de-weird bash to an extent, and I’d rather drop bash altogether when it’s insufficient, rather than try increasingly hard to work around bash’s weirdness. (I.e. I’d throw out the bathwater, baby and the family that spawned it at that point.)

I’ve been meaning to learn how to avoid using pipefail, thanks for the info!

Putting `or die "blah blah"` after every line in your script seems much less elegant than op’s solution

The issue with `set -e` is that it’s hideously broken and inconsistent. Let me copy the examples from the wiki I linked.

Or, “so you think set -e is OK, huh?”

Exercise 1: why doesn’t this example print anything?

    #!/usr/bin/env bash
    set -e
    i=0
    let i++
    echo "i is $i"

Exercise 2: why does this one sometimes appear to work? In which versions of bash does it work, and in which versions does it fail?

    #!/usr/bin/env bash
    set -e
    i=0
    ((i++))
    echo "i is $i"

Exercise 3: why aren’t these two scripts identical?

    #!/usr/bin/env bash
    set -e
    test -d nosuchdir && echo no dir
    echo survived

    #!/usr/bin/env bash
    set -e
    f() { test -d nosuchdir && echo no dir; }
    f
    echo survived

Exercise 4: why aren’t these two scripts identical?

    set -e
    f() { test -d nosuchdir && echo no dir; }
    f
    echo survived

    set -e
    f() { if test -d nosuchdir; then echo no dir; fi; }
    f
    echo survived

Exercise 5: under what conditions will this fail?

    set -e
    read -r foo < configfile
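As a hint for exercises 1 and 5, this small sketch records the exit statuses involved without turning on `set -e`, so the script survives long enough to show them. It assumes bash; the temporary file stands in for `configfile`, and the variable names are invented for the illustration.

```shell
#!/usr/bin/env bash

# Exercise 1: `let` returns status 1 when its expression evaluates to 0.
# i++ evaluates to the old value of i (0), so the command "fails"
# even though the increment itself happened.
i=0
let_status=0
let i++ || let_status=$?

# Exercise 5: `read` returns non-zero when it reaches end-of-file before
# seeing a newline, for example when the last line has no trailing newline.
config_demo=$(mktemp)
printf 'value' > "$config_demo"   # note: no trailing newline
read_status=0
read -r foo < "$config_demo" || read_status=$?
rm -f "$config_demo"

echo "let status: $let_status (i is $i)"
echo "read status: $read_status (foo is '$foo')"
```

Under `set -e`, either non-zero status would end the script on the spot, even though `i` was incremented and `foo` was filled in.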

And now, back to your regularly scheduled comment reply.

`set -e` would absolutely be more elegant if it worked in a way that was easy to understand. I would be shouting its praises from my rooftop if it could make Bash into less of a pile of flaming plop. Unfortunately, `set -e` is, by necessity, a labyrinthian mess of fucked up hacks.

Let me leave you with an allegory about `set -e` copied directly from that same wiki page. It’s too long for me to post it in this comment, so I’ll respond to myself.

Yup, and `set -e` can be used as a try/catch in a pinch (but your way is cleaner)

I was tempted for years to use it as an occasional try/catch, but learning Go made me realize that exceptions are amazing and I miss them, but that it is possible (but occasionally hideously tedious) to write software without them. Like, I feel like anyone who has written Go competently (i.e. they handle every returned `err` on an individual or aggregated basis) should be able to write relatively error-handled shell. There are still the billion other footguns built directly into bash that will destroy hopes and dreams, but handling errors isn’t too bad if you just have a little `die` function and the determination to use it.

“There are still the billion other footguns built directly into bash that will destroy hopes and dreams, but”

That’s well put. I might put that at the start of all of my future comments about `bash` in the future.
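The try/catch use of `set -e` mentioned a few comments up might look roughly like the sketch below, assuming bash. `try_block` is a hypothetical name. The second call shows one of the footguns: when the block runs as part of a `||` list, bash ignores `-e` inside it, so the “catch” never fires.

```shell
#!/usr/bin/env bash

# Hypothetical "try" body: a subshell with set -e, so the first failing
# command ends the subshell instead of the whole script.
try_block() {
  (
    set -e
    echo "step 1"
    false
    echo "step 2"
  )
}

# Called as a plain command: the subshell stops at `false`.
plain_status=0
plain_output=$(try_block)
plain_status=$?

# Called on the left of ||: -e is ignored inside the function and its
# subshell, so "step 2" runs and the handler on the right is skipped.
guarded_output=$(try_block || echo "caught")

printf 'plain (status %s):\n%s\n' "$plain_status" "$plain_output"
printf 'guarded:\n%s\n' "$guarded_output"
```

Checking `$?` after a plain call is therefore the only form of this pattern that behaves like a catch; the `||` form silently changes what the block does.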

Shell is great, but if you’re using it as a programming language then you’re going to have a bad time. It’s great for scripting, but if you find yourself actually programming in it then save yourself the headache and use an actual language!

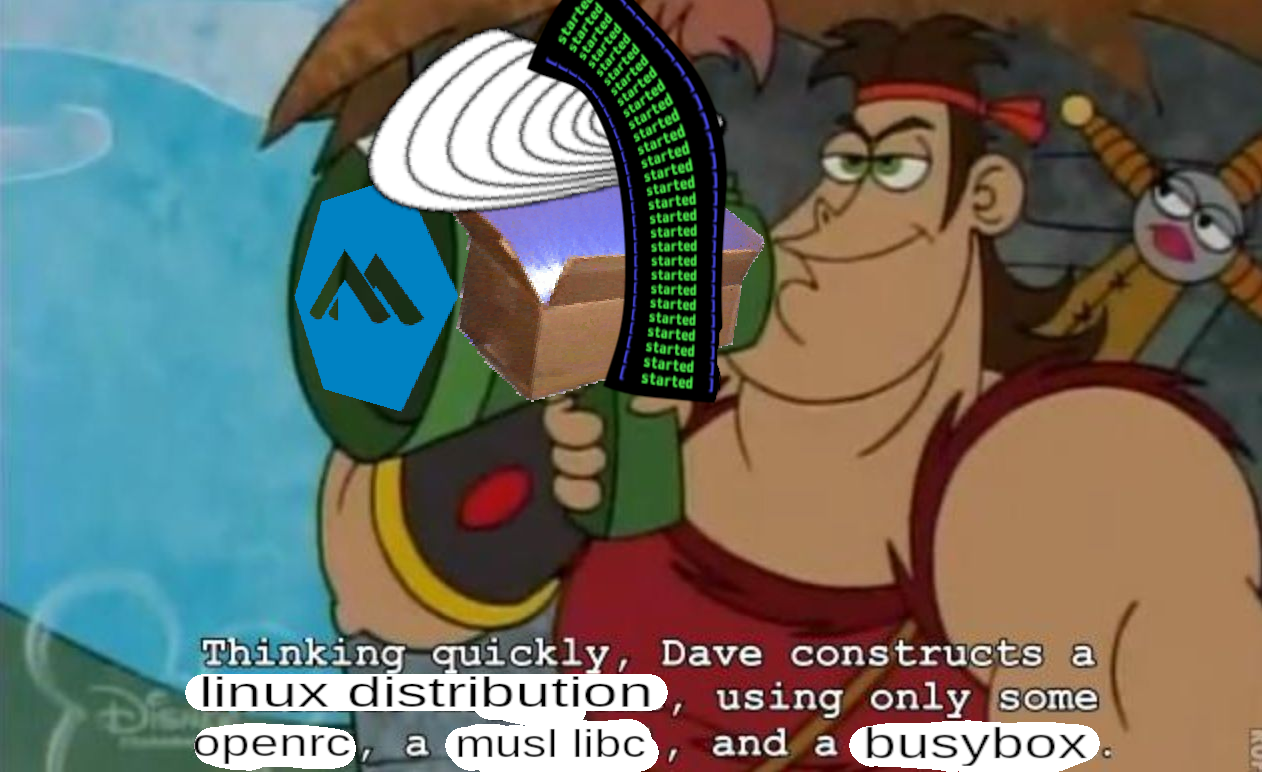

Alpine linux, one of the most popular distros to use inside docker containers (and arguably good for desktop, servers, and embedded) is held together by shell scripts, and it’s doing just fine. The installer, helper commands, and init scripts are all written for busybox sh. But I guess that falls under “scripting” by your definition.

Aka busybox in disguise 🥸

So what IS the difference between scripting and programming in your view?

No clear line, but to me a script is tying together other programs that you run, those programs themselves are the programs. I guess it’s a matter of how complex the logic is too.

just use python instead.

- wrap around `subprocess.run()` to call system utils
- use `pathlib.Path` for file paths and reading/writing to files
- use `shutil.which()` to resolve utilities from your `PATH` env var

Here’s an example of some python i use to launch vscode (and terminals, but that requires `dbus`)

    from pathlib import Path
    from shutil import which
    from subprocess import run

    def _run(cmds: list[str], cwd=None):
        p = run(cmds, cwd=cwd)
        # raises an error if return code is non-zero
        p.check_returncode()
        return p

    VSCODE = which('code')
    SUDO = which('sudo')
    DOCKER = which('docker')
    proj_dir = Path('/path/to/repo')
    docker_compose = proj_dir / 'docker/'
    windows = [
        proj_dir / 'code',
        proj_dir / 'more_code',
        proj_dir / 'even_more_code/subfolder',
    ]
    for w in windows:
        _run([VSCODE, w])
    _run([SUDO, DOCKER, 'compose', 'up', '-d'], cwd=docker_compose)

Cool, now pipe something into something else


Powershell is the future

- Windows and office365 admins

I even use powershell as my main scripting language on my Mac now. I’ve come around.

That sounds terrible honestly

I’m so used to using powershell to handle collections and pipelines that I find I want it for small scripts on Mac. For instance, I was using ffmpeg to alter a collection of files on my Mac recently. I found it super simple to use Powershell to handle the logic. I could have used other tools, but I didn’t find anything about it terrible.

#!/usr/bin/python

No, sorry. I’m a python dev and I love python, but there’s no way I’m using it for scripting. Trying to use python as a shell language just has you passing data across `Popen` calls with a sea of `.decode` and `.encode`. You’re doing the same stuff you would be doing in shell, but with a less concise syntax. Literally all of python’s benefits (classes, types, lists) are negated because all of the tools you’re using when writing scripts are processing raw text anyway. Not to mention the version incompatibility thing. You use an f-string in a spicy way once, and suddenly your “script” is incompatible with half of all python installations out there, which is made worse by the fact that almost every distro has a very narrow selection of python versions available on their package manager. With shell you have the least common denominator of posix sh. With Python, some distros rush ahead to the latest release, while others hang on to ancient versions. Even `print("hello world")` isn’t guaranteed to work, since some LTS ubuntu versions still have `python` pointing to python2.

The quickest cure for thinking that Python “solves” the problems of shell is to first learn good practices of shell, and then try to port an existing shell script to python. That’ll change your opinion quickly enough.

Lua

I’m glad powershell is cross-platform nowadays. It’s a bit saner.

Better would be to leave the 1970s and never interact with a terminal again…

Quick, how do I do `for i in $(find . -iname '*.pdf' -mtime -30); do convert -density 300 ${i} ${i}.jpeg; done` in a GUI, again?

    $time = (get-date).adddays(-30)
    gci -file -filter *.pdf `
    | ? { $_.lastwritetime -gt $time } `
    | % { convert -density 300 $_.fullname $($_.fullname + ".jpg") }

🤷

I fail to see the GUI in that.

Like, if I open Dolphin, Thunar, or `winfile`.

I missed where the person you replied to said to never use the terminal again, now your comment makes perfect sense. I thought you were conflating the preference for powershell with windows and therefore more GUI.

My bad, and I completely agree with you, if I had to give up CLI or GUI it would be GUI hands down no competition at all, I would die without CLI.

Better would be to leave the 1970s and never interact with a terminal again…

I’m still waiting for someone to come up with a better alternative. And once someone does come up with something better, it will be another few decades of waiting for it to catch on. Terminal emulation is dumb and weird, but there’s just no better solution that’s also compatible with existing software. Just look at any IDE as an example: visual studio, code blocks, whatever. Thousands of hours put into making all those fancy buttons menus and GUIs, and still the only feature that is worth using is the built-in terminal emulator which you can use to run a real text editor like vim or emacs.

I’m a former (long long ago) Linux admin and a current heavy (but not really deep) powershell user.

The .net-ification of *nix just seems bonkers to me.

Does it really work that well?

The .net-ification of *nix just seems bonkers to me.

It IS bonkers. As a case study, compare the process of setting up a self-hosted runner in gitlab vs github.

Gitlab does everything The Linux Way. You spin up a slim docker container based on Alpine, pass it the API key, and you’re done. Nice and simple.

Github, being owned by Microsoft, does everything The Microsoft way. The documentation mentions nothing of containers, you’re just meant to run the runner natively, essentially giving Microsoft and anyone else in the repo a reverse shell into your machine. Lovely. Microsoft then somehow managed to make their runner software (reminder: whose entire job consists of pulling a git repo, parsing a yaml file, and running some shell commands) depend on fucking dotnet and a bunch of other bullshit. You’re meant to install it using a shitty `setup.sh` script that does that stupid thing with detecting what distro you’re on and calling the native package manager to install dependencies. Which of course doesn’t work for shit for anyone who’s not on Debian or Fedora because those are the only distros they’ve bothered with. So you’re either stuck setting up dotnet on your system, or trying to manually banish this unholy abomination into a docker container, which is gonna end up twice the size of gitlab’s pre-made Alpine container thanks to needing glibc to run dotnet (and also full gnu coreutils for some fucking reason)

Bloat is just microsoft’s way of doing things. They see unused resources, and they want to use them. Keep microsoft out of linux.

What type of .net-ification occurs on *nix? I am current linux “admin” and there is close to 0 times where I’ve seen powershell not on windows. Maybe in some microsoft specific hell-scape it is more common, but it’s hard to imagine that there are people that can accept a “shell” that takes 5-10 seconds to start. There are apps written in c# but they aren’t all that common?