I am an engineer who has worked in the space industry my entire career, and here are my thoughts:

GOES and METEOR weather satellites transmit images publicly that are NOT real time, but are downlinked, processed, and uplinked for public broadcast. This is pretty simple and saves a lot of processing power on the spacecraft side. That’s important because the biggest constraints on spacecraft processing are: power budget, radiation hardiness, and thermal.

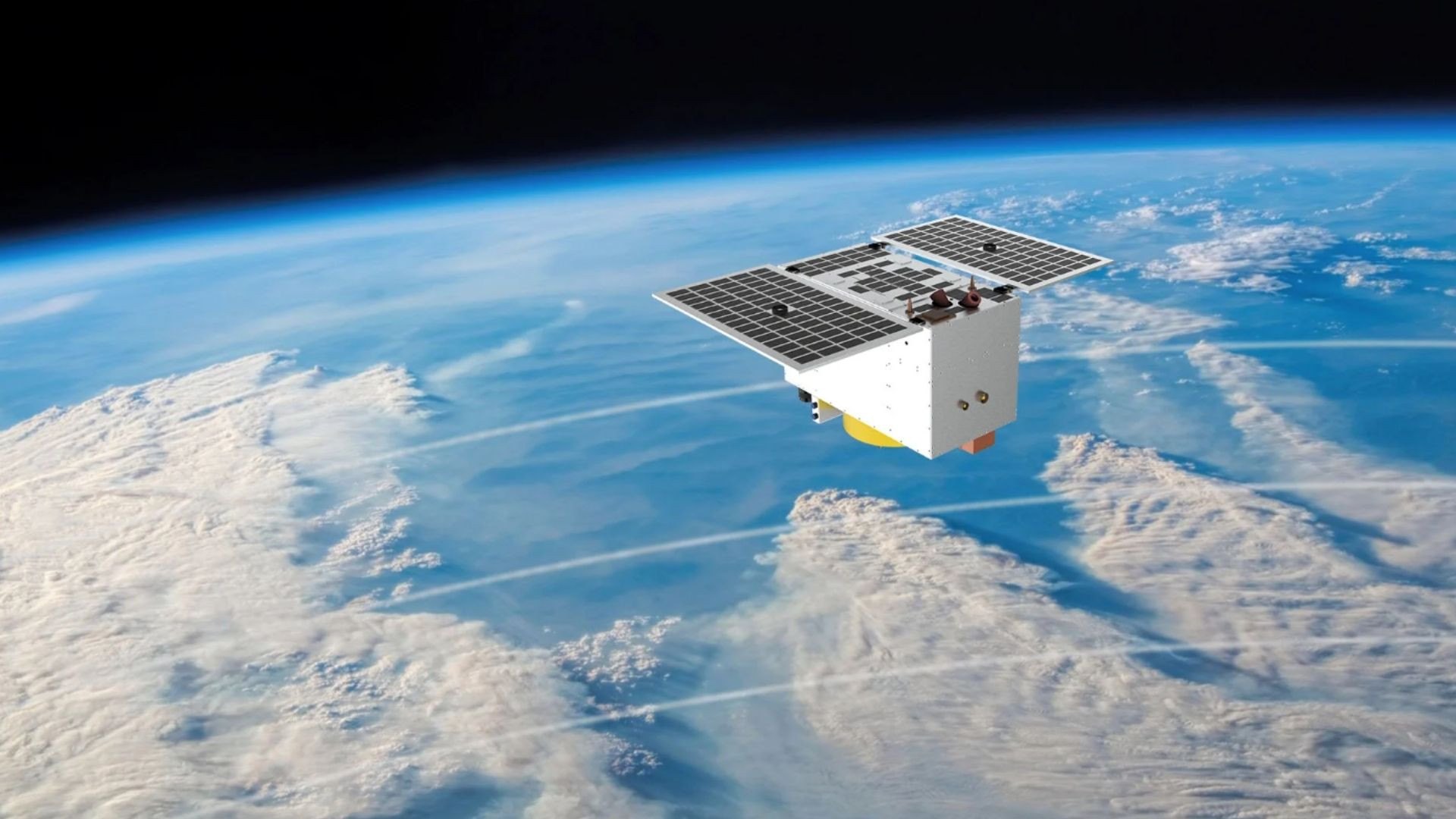

I was able to find an image of the actual satellite in assembly. From this we can guess that there is probably not more than a square meter of solar on board, so we can give it a round 1300 W of incident sunlight. I couldn’t find any orbital parameters (if Gunter doesn’t have it, who does?), but given its main task is as an imager, we can assume LEO, and so this 1300 W isn’t going to be constant since the spacecraft will most likely be eclipsed part of the time.

Generous 1000 W average solar flux, generous 25% panel efficiency: 250 W of electrical power.
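For anyone who wants to tweak the numbers, here is that back-of-envelope estimate as a small Python sketch. The function name and the optional `sunlit_fraction` parameter are my own additions for illustration, and the inputs are the rough guesses above, not published specs.

```python
def average_electrical_power_w(flux_w_per_m2, panel_area_m2, efficiency,
                               sunlit_fraction=1.0):
    """Rough electrical power from a sun-pointed flat panel.

    sunlit_fraction is a hypothetical knob for eclipse time; leave it at 1.0
    if the flux figure is already an orbit average.
    """
    return flux_w_per_m2 * panel_area_m2 * efficiency * sunlit_fraction


# Guessed inputs from the post: 1000 W/m^2 average flux, ~1 m^2 of panel,
# 25% cell efficiency.
estimate_w = average_electrical_power_w(1000.0, 1.0, 0.25)
print(f"{estimate_w:.0f} W")  # prints "250 W"
```

Dropping `sunlit_fraction` below 1.0 shows how quickly the budget shrinks if the 1000 W figure turns out not to account for eclipse.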

So let’s look at rad-hard processors. They have to be either shielded or run in multiples with voting, though even that isn’t fully acceptable, as some SEUs (single event upsets) can cause permanent damage and leave you down a voting member. The latest and greatest RAD5545 advertises 5.6 giga-operations per second (GOPS) at 20 watts, so if we assume (artlessly, and likely incorrectly) a linear power usage, the 80 TOPS of the WJ-1A should need roughly 286 kW. So we know they aren’t using a typical rad-hard CPU topology for their AI models. I see that Coral/Google advertise 2 TOPS per watt on their Edge TPUs (Tensor Processing Units).
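The linear extrapolation is easy to check. A quick sketch, using only the figures quoted above (the function name is made up for illustration, and the linear-scaling assumption is almost certainly wrong for real hardware):

```python
def linear_power_w(target_tops, ref_gops, ref_watts):
    """Scale a reference chip's power linearly up to a target throughput."""
    target_gops = target_tops * 1000.0  # 1 TOPS = 1000 GOPS
    return target_gops / ref_gops * ref_watts


# RAD5545: 5.6 GOPS at 20 W (advertised); WJ-1A: 80 TOPS (claimed).
power_w = linear_power_w(80.0, 5.6, 20.0)
print(f"{power_w / 1000:.0f} kW")  # prints "286 kW"
```

That is around three orders of magnitude more than a small spacecraft can plausibly generate, which is the whole point of the comparison.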

So assume a large ASIC (application-specific integrated circuit) at the same efficiency of 2 TOPS/W, with 4x redundancy for voting, and we get a far more reasonable 160 W. Still a LOT of power on orbit for such a small spacecraft, but actually possible.
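Same exercise for the ASIC case, as a rough sketch. The function name is hypothetical, the 4x copy count is my guess at a voting arrangement, and the 250 W budget is the panel estimate from earlier in this post, not a known figure for this spacecraft.

```python
def redundant_asic_power_w(target_tops, tops_per_watt, copies):
    """Power for `copies` identical accelerators, each running the full load."""
    return target_tops / tops_per_watt * copies


asic_w = redundant_asic_power_w(80.0, 2.0, 4)  # 80 TOPS, 2 TOPS/W, 4 copies
panel_budget_w = 250.0                         # rough panel estimate from above
print(f"{asic_w:.0f} W, {asic_w / panel_budget_w:.0%} of the panel estimate")
# prints "160 W, 64% of the panel estimate"
```

So the payload compute alone would eat about two thirds of the estimated generation, before the bus, radios, or heaters get anything.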

So for thermal limits, do they run the TPU only on the dark side in place of their on-board heater? They have some white panels that might be radiators, but it’s hard to say.

Hard to say from these fluff articles. I really want to hear:

- What’s the efficiency on the TPU?

- How did they make it rad-hard, and how long do they expect it to last?

- What models do they run on the edge?

- What is their downlink budget? Can they pull full imagery if they want it or are they limited to ML analysis only?

I expect to see more ML in space, but to be honest I did not expect it to be in such a small form factor.

Sounds like a gimmick.

It’s Chinese, of course it is.

I call shenanigans. A fully autonomous space vehicle is three miracles away - we need a revolution in avionics to get systems capable of running computationally-expensive models, a revolution in sensor technology to allow for dense state knowledge of satellite systems without blowing mass and volume budgets, and we need a revolution in AI/ML that makes onboard collision avoidance and system upkeep viable.

I do believe that someone has pre-trained a model on vegetation and terrain features, has put that model up on a CubeSat, and is using it to “autonomously” identify features of interest. I do believe someone has duct-taped an LLM to the ground systems to allow for voice interaction. I do not agree that those features indicate a high level of autonomy on the spacecraft.

Hmm, I’m not sure what to think about that.

That’s cool tech and has the potential for great discoveries. But on the other hand, this just seems like Skynet…

The next thing we know, a T-1000 is going to show up!

I must have missed the part where it has the capability to launch missiles.

I thought this was the banner of a new Minecraft modpack