Sounds like there’s a story behind this, where could I read more?

Yes, but if they do find a poor schmuck who wants the job, they can hope he’ll undervalue himself and ask for even less.

Yeah, traceroute might hint at that, if this is what is going on.

I will perhaps be nitpicking, but… not exactly, not always. People get their shit hacked all the time due to poor practices. And then those hacked things can send emails and texts and other spam all they want, and it’ll not be forged headers, so you still need spam filtering.

left-pad as a service.

It’s probably AI-supported slop.

(Not to be confused with our premium product, ParticleServices, which just shoot neutrinos around one by one.)

No, it’s just that it doesn’t know if it’s right or wrong.

How “AI” learns is that it goes through a text - say a blog post - and turns it all into numbers. E.g. the word “blog” is 5383825526283 and the word “post” is 5611004646463. Over a huge amount of text, a pattern emerges: the second number almost always follows the first. Basically statistics. It does that for all the words and word combinations it finds, and an immense amount of text is needed to find all those patterns. (Fun fact: that’s why companies like OpenAI, which makes ChatGPT, need hundreds of millions of dollars to “train the model” - they need enough computing power, storage, and memory to read the whole damn internet.)

So now how do the LLMs “understand”? They don’t, it’s just a bunch of numbers and statistics of which word (turned into that number, or “token” to be more precise) follows which other word.

So now. Why do they hallucinate?

How they work when they get your question: they turn all the words in your prompt into numbers again, and then go looking in their huge databases for which words are likely to follow yours.

They add in a tiny bit of randomness, and sometimes replace a “closer” match with a synonym or a less likely match, so they even seem real.

They add “weights” so that they would rather pick one phrase over another, or e.g. give some topics very very small likelihoods - think pornography or something. “Tweaking the model”.

But there’s no knowledge as such, mostly it is statistics and dice rolling.

So the hallucination is not “wrong”, it’s just statistically likely that those words would follow, based on your words.
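To make the “statistics and dice rolling” idea concrete, here is a toy sketch. It is not how a real LLM is built (those use neural networks over numeric tokens, not a lookup table of words), and the names `train` and `nextWord` are made up for this example. It only shows counting which word follows which, then rolling a weighted die.

```typescript
// Toy bigram model: for every word, count which words followed it.
type Counts = Record<string, Record<string, number>>;

function train(text: string): Counts {
  const words = text.toLowerCase().split(/\s+/).filter((w) => w.length > 0);
  const counts: Counts = Object.create(null);
  for (let i = 0; i + 1 < words.length; i++) {
    const current = words[i];
    const next = words[i + 1];
    if (!counts[current]) counts[current] = Object.create(null);
    counts[current][next] = (counts[current][next] || 0) + 1;
  }
  return counts;
}

// Pick a follower with probability proportional to how often it was seen.
// `random` is injectable so the dice roll can be fixed when experimenting.
function nextWord(
  counts: Counts,
  word: string,
  random: () => number = Math.random
): string | undefined {
  const followers = counts[word];
  if (!followers) return undefined; // never seen this word followed by anything
  const candidates = Object.keys(followers);
  let total = 0;
  for (const c of candidates) total += followers[c];
  let roll = random() * total;
  for (const c of candidates) {
    roll -= followers[c];
    if (roll < 0) return c;
  }
  return undefined; // only reachable if random() returns 1 or more
}

const model = train(
  "the blog post was long the blog post was good the blog was old"
);
```

In this tiny training text, “blog” was followed by “post” twice and by “was” once, so `nextWord(model, "blog")` usually says “post” and sometimes “was”. Neither answer is checked against anything; it is only what tended to come next.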

Did that help?

You never review code when you have no time to do an actual review? Looks good to me :)

Is that pronounced as gokoze?

Not that simple. You have several moving parts just in your frontend. But all of your frontend is still accessible. E.g. if you run ng build, the output javascript will contain links to your module:

    // src/app/app.routes.ts
    var routes = [
      {
        path: "no-match",
        canMatch: [noMatchGuard],
        loadChildren: () => import("./chunk-2W7YI353.js").then((m) => m.NoMatchComponent)
      },
      {
        path: "no-activate",
        canActivate: [noActivateGuard],
        loadChildren: () => import("./chunk-JICQNUJU.js").then((m) => m.NoActivateComponent)
      }
    ];

So whoever wants to can see what’s in those separate files and just load the code in those components directly.

And of course, you have the backend completely separately anyway. Those two lazy-loaded modules - whether protected by guards or not - will contain links to your /count. If they’re called or not is not relevant, whoever is interested can read the code and find the URLs. Someone can just call your /count without even looking at your code.

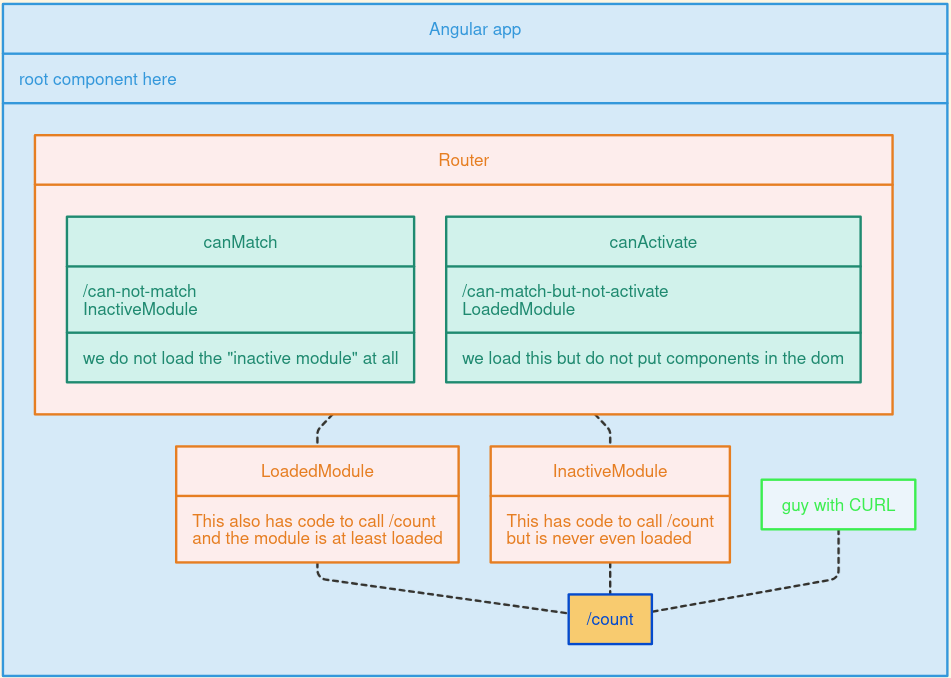

See if this lil image of the moving parts helps:

shaking my (trademark) head?

Hey all, I just spotted the question by accident. I’ll try to answer it in case you’re still wondering, or for some future concern.

The difference is that canMatch is evaluated first, while the router is still deciding whether the route matches the URL at all. Imagine a canMatch guard that only allows admins in.

That means you can e.g. prevent even attempting to load the routes (e.g. lazily) if you know your current user is not an admin. They try to open /site-settings or /users page or similar - and you just nope them back.

canActivate, in contrast, will first go load the remote route, and only then check the user.

Now, you also asked why. Well, the difference is usually tiny, but it can matter. Let’s say you have some data loading, some actions being performed, some background sync operations running when you load a lazy route /admin. For example, you have an /admin page, and when it loads, the canActivate guard needs to go back to the server and ask whether this particular admin can administer this particular tenant. If you use canActivate, some of that work always runs. With canMatch, if you know your user is not an admin at all, you don’t even try to load the module, and you save that time.

Tiny bit of difference, but it can help sometimes.
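If it helps to see the ordering spelled out, here is a toy model of it. To be clear, this is not Angular’s router and not its API: `ToyRoute`, `navigate` and `load` are hypothetical names for this sketch only, and `load` just stands in for the lazy `import()`.

```typescript
// Toy model of the guard ordering described above. NOT Angular's real router.
type Guard = () => boolean;

interface ToyRoute {
  path: string;
  canMatch?: Guard[];
  canActivate?: Guard[];
  load: () => string; // stands in for a lazy import()
}

function navigate(routes: ToyRoute[], url: string, log: string[]): string | null {
  for (const route of routes) {
    if (route.path !== url) continue;
    // canMatch runs first: if it fails, the route is skipped and nothing is loaded.
    if (route.canMatch && !route.canMatch.every((g) => g())) {
      log.push(`skipped ${route.path}`);
      continue;
    }
    // The lazy chunk is fetched here, before canActivate gets a say.
    const loaded = route.load();
    log.push(`loaded ${route.path}`);
    if (route.canActivate && !route.canActivate.every((g) => g())) {
      log.push(`rejected ${route.path}`);
      return null;
    }
    return loaded;
  }
  return null;
}

const isAdmin: Guard = () => false; // pretend the current user is not an admin
const log: string[] = [];

navigate([{ path: "/admin", canMatch: [isAdmin], load: () => "admin module" }], "/admin", log);
navigate([{ path: "/admin", canActivate: [isAdmin], load: () => "admin module" }], "/admin", log);
// log is now: ["skipped /admin", "loaded /admin", "rejected /admin"]
```

Same guard, same user, but only the canActivate version paid for the load before saying no.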

So, send’em a dicpic and you’re in :)

Actual programmer

I wonder if JJ anonymous branches would be something that solves this. I’ve only read about it, have not used JJ yet.

Well, the API angle is similar to Space Traders