And it failed spectacularly.

We only needed a simple form, but we wanted to be fancy, so we used “nextcloud forms”.

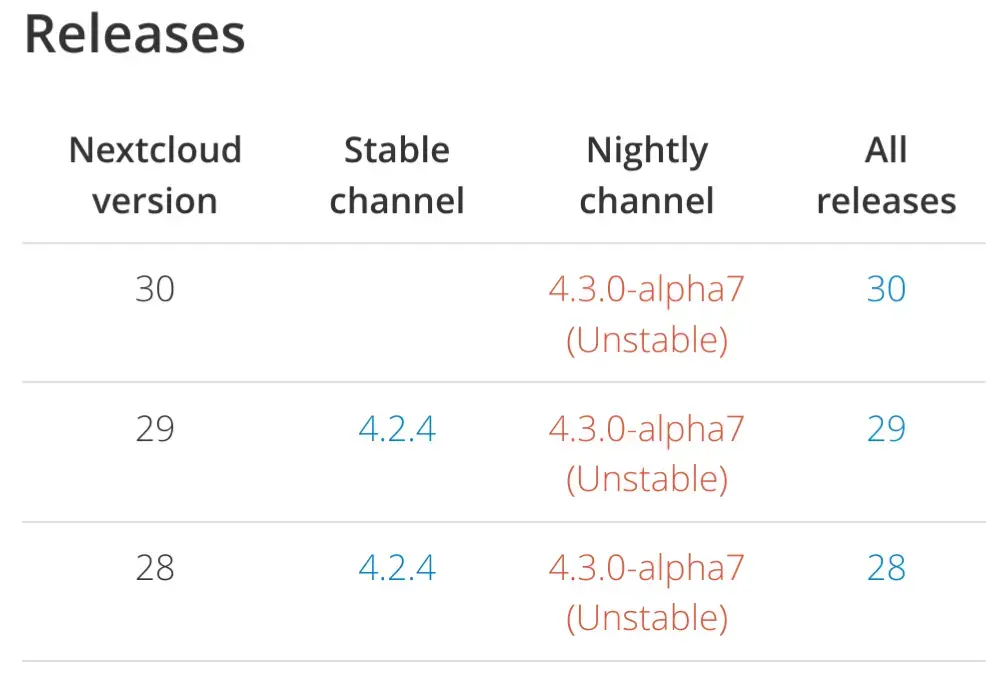

The Docker image automatically updated the install to Nextcloud 30, but the Forms app requires Nextcloud 29 or lower. No warning whatsoever. It’s an official app; couldn’t they wait until it was ready for NC 30 before launching? The newsletter boasts “NC hub 9 is the best thing after sliced bread”, yet I don’t see any difference in either visuals or performance compared to NC Hub 2.

Conclusion: we made our business rely on Nextcloud Forms for a signup form, but the one feature we were using it for was disabled who knows how many weeks ago.

Wait, you update production systems without running a staging environment? Or even checking the update notes and your installed apps? Also no backups? What kind of business are you running over there?

Oh, Nextcloud docker is a joke. They follow no standards or best practices when it comes to Docker. They keep the entire app directory mounted as a volume, which means it does upgrade you without you “needing” to upgrade the Docker image. They have volumes within volumes they need to mount. Their configs can (and do) override environment variables. Most actions that need to be taken require running an `occ` command, which can only be done by exec’ing into the container.

Nextcloud docker is honestly just such a joke. They should have rethought their application from a Docker perspective, and they didn’t. God, just number one: Docker images should never update. It’s a freaking pinned version for a reason. If I want to update, it should be as simple as bumping the version tag, and it does any upgrades in place when I do that.

I honestly steer people away from Nextcloud now because of how mismanaged their images are.

Yep, and I’d guess there’s probably a huge component of “it must be as easy as possible” because the primary target is selfhosters that don’t really even want to learn how to set up Docker containers properly.

The AIO Docker image is an abomination. The other ones are slightly more sane but they still fundamentally mix code and data in the same folder so it’s not trivial to just replace the app.

In Docker, the auto updater should be completely neutered, it’s the wrong way to update the app.

The packages in the Arch repo are legit saner than the Docker version.

I had to learn how to mount subpaths for their terrible container, and god, just the updater is mind-boggling. And I have to store their code in a volume, because of course I have to; why would code and configuration ever need to be… configurable? I actually just tried to put their `config.php` into a ConfigMap just to try, and of course PHP doesn’t allow that (not that I blame PHP for it), but ffs, it’s been years, it’s time to allow config to also come from a YAML file or something.

ownCloud’s rewrite in Go is way better

I’m attracted to it because of the posix backend. Did anyone try it? Is it stable?

For reference, https://owncloud.dev/architecture/posixfs-storage-driver/

I’m testing it now. Seems way faster and more stable.

I’m just trying to get the oauth login to work but the actual file sync works great.

Is this compatible with existing (Android) clients? I need offline file support for KeePass.

Yes it works with the android app

Yeah I’ve thought about migrating, but I have a few users on it who use nextcloud regularly now, so I’m forced to support it - unless there’s an easy migration path

Having the web server be able to overwrite its own app code is such a good feature for security. Very safe. Only need a path traversal exploit to backdoor `config.php`!

What’s the better way of hosting it?

I do it in docker at home, for myself, in an environment I am okay with accidentally destroying - and even then I have nightly backups of the volumes.

In a professional system, as mentioned in my other comment, I would simply do it in a VM with the disk also scheduled for nightly backups. Nextcloud just hardcoded too many things that assume the underlying system is mutable. Unfortunately, that’s just the easiest way to handle it.

However, also as mentioned, if I were in a professional environment, I’d have to really look at the cost for all of that infrastructure and my time to run it - and decide if I really thought I could run it myself with all of that overhead, and that it would still make sense compared to just doing google docs or something. Remember it’d be my ass on the line, as OP is learning

I wiped a whole drive (luckily it was filled with a redundant backup) with the Docker image, as the behavior was (or still is, I don’t know if it was fixed) to `rm -rf .` and replace everything with fresh files if `occ` isn’t found. So in the docker compose file I accidentally typed the wrong volume, `/mnt/disk2` instead of `/mnt/disk3`, and it erased it.

Oh yeah, if you’re in a professional environment, I’m sorry, but that’s just not great. The only way I’d consider running Nextcloud professionally would be on a VM of its own with nightly disk backups, with blob storage as the backing, and even then, with the cloud costs, how close are you really to just paying for an enterprise license from Google or Microsoft? Plus you’d avoid the headache of having to worry about it yourself.

The images work fine for me. The problem is that Nextcloud is a complex app that doesn’t really work with the design of one container to do one job. It is pretty much a regular application that uses docker for packaging.

That doesn’t make up for bad container decisions. I run much more complex containers both that split out responsibilities and that contain everything as one container. The size and complexity is irrelevant to the bad design decisions. You can have an image that eats up gigabytes of space that runs off of proper environment/config variables with properly mounted volumes.

Again, their Docker image is just a packaging format and a health check. I very much wish it were better, but for now it works.

Just because it works doesn’t mean it follows best practices.

https://docs.docker.com/build/building/best-practices/#create-ephemeral-containers

Yes, no staging, because it’s used by at most 2 concurrent users; we were OK with 95% reliability (we only discovered it was disabled after at least two weeks, lol).

Otherwise we would just have signed up to one of the many cloud forms sites at $100/year

Backups are daily, but it’s unthinkable to revert something like Nextcloud to a month-old one.

Subscribed to both newsletter and RSS feed to know about issues (the command to update the docker images isn’t automated but manually issued). The maintainer of the forms app is nextcloud itself so any incompatibility should have been written in red bold characters in the blog posts and newsletter.

Why are your backups so out of date? Just set up daily snapshots and call it a day if it isn’t critical. You never want to update major versions first thing. Wait 3 months and then update.
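The “wait 3 months” rule is easy to turn into a mechanical check instead of a judgment call made on newsletter day. A minimal sketch, assuming you record each major release’s date yourself; the 90-day soak period and the name `is_safe_to_upgrade` are illustrative choices, not anything Nextcloud publishes:

```python
from datetime import date, timedelta

# Illustrative soak period approximating "wait 3 months"; tune to taste.
SOAK_PERIOD = timedelta(days=90)


def is_safe_to_upgrade(release_date: date, today: date, soak: timedelta = SOAK_PERIOD) -> bool:
    """True once a major release has been public for at least `soak`."""
    return today - release_date >= soak
```

For example, a release dated `date(2024, 1, 1)` passes on `date(2024, 4, 1)` (91 days later) but not on `date(2024, 3, 1)` (60 days later).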

This smells like shadow IT

I have daily backups and hourly ZFS snapshots. The problem is that, because nobody used the useless survey plugin, I have no idea when it broke. It could have been yesterday or it could have been 4 months ago.

One that lacks a good IT department apparently

To be fair a certain security company was in global news for exactly that same send it behavior. Why waste precious resources on multiple instances? Investors hate waste. 😅

The world is your ~~oyster~~ test env

It worked on my box!

If I understand correctly, nextcloud automatically updated … which I didn’t think it would, normally. Maybe it’s a “feature” of the AIO docker image?

Backups and rollbacks should be your next endeavor.

Seems easier to blame Nextcloud

I have daily Borg backups held for at least one year, but the problem is that the issue came out at least two weeks ago and nobody noticed. It’s better to have nothing (a customer gets an error page when viewing a useless survey that nobody is watching) than to restore such an old backup (everyone loses 2-4 weeks of data).

Docker automatically upgrades if you tell it to by specifying “latest” or not specifying a version number. But it only upgrades when a newer image is actually pulled, e.g. with the pull command (compose up on its own only pulls images that aren’t already present locally). There are ways to start without a pull, like using start or restart. So yes, there was a warning, and something you did actively told it to upgrade.
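A quick way to audit a setup for the “latest or no tag” trap is to check each image reference before pulling. A minimal sketch; `has_explicit_version` is a hypothetical helper and the image names are examples. It only flags references with no tag (which Docker resolves to `latest`) or an explicit `latest`; a tag such as `30` can still be re-pointed by its publisher, so only a `@sha256:` digest is truly immutable.

```python
def has_explicit_version(image: str) -> bool:
    """False for references that Docker resolves to the moving `latest` tag.

    Any explicit tag other than `latest` is accepted here, even though its
    publisher may re-point it; a digest (`name@sha256:...`) never moves.
    """
    if "@" in image:
        return True  # digest reference
    # Only the last path component can carry a tag; the registry host may contain ":port".
    name = image.rsplit("/", 1)[-1]
    if ":" not in name:
        return False  # no tag means an implicit "latest"
    return name.split(":", 1)[1] != "latest"


images = [
    "nextcloud",
    "nextcloud:latest",
    "nextcloud:29.0.7-apache",
    "registry.example:5000/nextcloud",
    "nextcloud@sha256:" + "0" * 64,
]
for ref in images:
    print(f"{ref}: {'explicit' if has_explicit_version(ref) else 'floats with latest'}")
```

Running something like this over the `image:` values of a compose file before each pull makes an accidental major upgrade a deliberate edit instead.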

And it’s really bad practice to update any software without testing, especially between breaking/major version numbers.

Finally, it’s not uncommon for a platform to release its update and then the plugins or addons to follow. Especially with major updates that require lots of testing before release. This allows plugin/add-on makers to fully test their software with the release version of the platform rather than all of the plugin makers having to wait for one that may be lagging behind.

You again brought up the Docker upgrade, which I already said is irrelevant. It’s like saying “if you’d worn pants that day, they wouldn’t have done that; it’s your fault”. It doesn’t make sense to spend $1000 in operating costs to host a useless survey that gets 3 responses a year. If it breaks for a week, nobody dies.

Focus on how they’re moving fast and breaking things, OK? It’s not normal that official plugins don’t support the latest stable release. It’s not an alpha, it’s not a beta, it’s stable. Stable means everything needs to work. Official plugins need to support the latest stable release. It would be acceptable only if this were a third-party plugin made by a hobbyist in their free time.

WordPress updates also break many plugins but it never happened that a stable release blocked official ones like woocommerce.

By the way, now I have learned that the latest version of nextcloud is a public beta and it’s better to always stay one version behind. So why don’t they call it public beta?

Why did you do automatic updates without testing? That is the real issue.

Honestly your IT department sounds like it could use some help

Manual Docker upgrade issued by me after reading the official blog and newsletter. The upgrade notes described the new version as the best thing ever and didn’t mention that one of their selling points would be disabled without any notice.

I’m starting to see a pattern in those comments like “why did you wear a skirt that night? It looks like you asked for it…”

Whoah, dude.

Not only are you being told what could have and will ward off unplanned breakage, but you have somehow characterised yourself as an unsuspecting victim here? Inaccurate and really inappropriate comparison.

You knew enough to take on deploying a service, now comes the grown-up part where you hedge against broken updates.

I don’t know if maybe it’s my bad english in explaining it or it’s your comprehension skills that lack something.

I’ll write it again for the 10th time: I’m 100% OK with 1-2 weeks of downtime, and this is why I do it live. It simply doesn’t make sense to dedicate several hours every month to testing if all I need is 3 useless surveys filled in per year. If it were essential for work and I needed 99.99999% uptime, I would just subscribe to Typeform or SurveyMonkey. If tomorrow my install completely bricks and vanishes into thin air, I will have lost 30 minutes of time and no valuable data. I literally spent more time designing the logo for the instance than managing it. That’s how unimportant the data stored on it is.

This post wasn’t about “oh no, I lost millions and all my irreplaceable data thanks to Nextcloud’s stupid updates” but about how stupid it is to release something that breaks features they’re using as a selling point.

OK, now that we’ve established that my IT skills are lacking and I should be fired because one single survey couldn’t be filled in, here are the release notes: https://docs.nextcloud.com/server/latest/admin_manual/release_notes/upgrade_to_30.html

Please tell me where they say that this feature is automatically disabled and also tell me why you think that this is acceptable.

I don’t understand why you think that is acceptable.

I can’t even find other examples of a release so rushed that selling points are disabled with no ETA. I never saw LibreOffice 24 drop support for OpenDocument files for a couple of months just because they had to meet a self-imposed deadline.

> I’m starting to see a pattern in those comments like “why did you wear a skirt that night? It looks like you asked for it…”

Cute victim mentality, but gross and insanely wrong comparison

Learn from your mistake and don’t update without testing next time, it’s 100% on whoever updates the production environment to make sure that shit isn’t broken for whatever reason before pushing it customer-side

It’s more like you bought a random white powder from your dealer without asking what it was and are now upset you almost died

ok, please tell me where in the release notes they say that the forms app will be automatically disabled without warning after update, thanks https://docs.nextcloud.com/server/latest/admin_manual/release_notes/upgrade_to_30.html

Literally just googled “nextcloud forms” and looked at their supported versions, and whaddya know, it says right on that webpage that there’s no stable version for 30 yet, so a safe bet would be that it wouldn’t work properly after upgrading.

There is a supported nightly build, though, so you could probably have tried that

It’s on you to look up what will break when you update, or to test and see what happens when you do. A major update page isn’t going to list all of the things that rely on it that break because that’s fucking unreasonable

Go check who maintains Nextcloud Forms, then see whether they could have known that NC 30 was about to come out or not.

It’s definitely not unreasonable that if I make product X and I make product Y, and they’re not compatible, then a bit of warning is suggested.

Again, WordPress updates break plugins all the time, but Automattic plugins (made by the same people behind WordPress) never break. Coincidence? Do they just launch a new WordPress without checking whether WooCommerce or Jetpack still work?

> then a bit of warning is suggested

Which was given by the app that gets broken by the update

Windows doesn’t tell you that upgrading to 11 will break x, y, and z that you have installed, you’re expected to go to the sites for those programs and check if they work. Same exact idea

The same company making both apps is never a guarantee that they’ll play nice day 1, for many reasons

I’ll repeat: learn from your mistake instead of blaming other people for your naivete. If an app is important and might break during an update of something, check the app’s documentation to see if it supports said update.

Ok i get it, it’s best practice to do rushed releases without QA because users are the free testers.

They definitely had no way to know that their own app was incompatible, this is definitely a problem of the stupid user. Idiot user who believed their newsletter “update now, hub 9 is the best thing ever”. The user should have known that stable = untested beta

Also, this issue happened exclusively to me in the whole world, because everyone else isn’t an idiot like me and checks 30+ release notes scattered across 30 different repositories to guess any incompatibility. I was lazy and only checked the main notes! Such an idiot! Why didn’t I check every single installed app? It’s just 30! Nextcloud devs couldn’t have known that Nextcloud devs didn’t update the manifest of the Forms app! I should have checked before! Completely my fault!

Now, if you’ll excuse me, I’ve got an update to the Windows Nextcloud desktop app, and it must reboot after updating because reasons, even though there’s a GitHub issue with 200 angry comments about that. No wait! Stupid me! First I have to fire up a VM and spend a whole week writing automated tests that account for every possible combination of settings, language, power management, installed apps and so on. Otherwise I could lose a worthless survey that nobody reads and that will definitely get me fired!

Docker is kind of a giant mess in my experience. The trick to it is creating backup plans to recover your data when it fails. As such, I don’t really recommend it to anyone at all.

I wouldn’t recommend Docker for a production environment either, but there are plenty of container-based solutions that use OCI compatible images just fine and they are very widely used in production. Having said that, plenty of people run docker images in a homelab setting and they work fine. I don’t like running rootful containers under a system daemon, but calling it a giant mess doesn’t seem fair in my experience.

Honestly it is fine assuming you don’t need 24/7 uptime. Just make a compose file and verify you have a working health check

Sounds like you are not using docker correctly.

There was a recent related discussion on Hacker News and the top comment discusses why this sort of solution is not likely to be the best fit for smaller organizations. In short, doing it well requires time and effort from someone technically sophisticated, who must do more than the bare minimum for good results, as you just learned.

Even then, it’s likely to be less reliable than solutions hosted by big corporations and when there’s a problem, it’s your problem. I don’t want to discourage you, but understand what you’re committing to and make sure you have adequate buy-in in your organization.

That reminds me of work. I’m old, young me has been through the mistakes and the pain of wanting to control and self-host everything.

Now I manage a team of young idealists who have not yet been burned sufficiently hard by reality and I feel like I spend half of my time denying them permission to add new self-hosted services to our stack.

Just last month a young padawan was pissed at the spend on an external auth service and had been pushing hard for a self-hosted OSS solution, which he was convinced he could handle by himself (which was most likely true, from a purely technical standpoint).

Since he wouldn’t let it go, I “punished” him by having him spend one day in excel and powerpoint to prepare a cost benefit analysis to present to the architecture review board, including server cost, backups, redundancy, security, monitoring, pen-testing, auditing, his time and all the bells and whistles we needed to be compliant with all the ISO-x we have to be. (we’re in a banking related field).

Our estimated internal cost ended up about 6x that of the SaaS solutions, and it still wasn’t as reliable.

Most people don’t understand the amount of effort it takes to run a secure and reliable system, and if I had a dollar for every time I heard it’s as simple as “docker run”, I could retire early.

Never upgrade to the latest and greatest of … anything really, especially in production. Let others test it first, or as suggested already, have a staging environment where you test the upgrade first. I guess you can still downgrade nextcloud though, especially if you have a backup.

Are you using the AIO image? I don’t know how well that works, but yeah, I absolutely hate automatic updates like that. I tried it once and I decided to use the plain “official but not supported” docker image instead, where I manage things myself. Never had an issue, and I can control which version I’m running, I can backup to wherever I want, using whichever system I want, etc.

AIO has an updater, but it is manual by default. You need to enable automatic updates yourself, which… is done through a bash script you need to add to the system crontab yourself.

And not only that, the instructions do say things could break, and even suggest setting up backups for that.

No offence, but is Docker really the best way of running NC in a professional environment? Also, if you don’t want Docker to upgrade to latest image, don’t use the “latest” tag in your configuration.

Docker is probably the simplest way to get a working deployment, since there’s a lot of moving pieces in a Nextcloud install.

Though, it’s not going to automatically update itself unless you’ve made a poor choice for a production environment configuration, which sounds like what happened here.

(Even using a latest tag isn’t really a problem until/unless you re-pull the image to do the upgrade. And/or have configured something to automatically update your shit, but again, don’t do that in production.)

Nextcloud is also annoying in that updating the base won’t pull all the apps to a current version, so you have to know what’s going to break before you update the base so you can then update the apps as needed. Which, again, can’t just be left up to automatic updates.
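Each Nextcloud app ships an `appinfo/info.xml` that declares the server versions it supports in a `<dependencies><nextcloud min-version="…" max-version="…"/></dependencies>` element, and an app whose declared range excludes the new server version is the kind you’d expect to find disabled after an upgrade. A minimal sketch that reads those files before upgrading; the function names are hypothetical, only the major version is compared, and an app with no declared range is reported as unverified rather than assumed compatible:

```python
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import List, Optional


def _major(version: Optional[str]) -> Optional[int]:
    """'29' or '29.0.1' -> 29; a missing attribute stays None."""
    return int(version.split(".")[0]) if version else None


def supports_server(info_xml: str, target_major: int) -> bool:
    """True if the app's declared Nextcloud range includes target_major."""
    root = ET.fromstring(info_xml)
    dep = root.find("dependencies/nextcloud")
    if dep is None:
        return False  # no declared range: treat as unverified
    low = _major(dep.get("min-version"))
    high = _major(dep.get("max-version"))
    if low is not None and target_major < low:
        return False
    if high is not None and target_major > high:
        return False
    return True


def incompatible_apps(apps_dir: Path, target_major: int) -> List[str]:
    """Names of apps under apps_dir whose info.xml excludes target_major."""
    bad = []
    for info in sorted(apps_dir.glob("*/appinfo/info.xml")):
        if not supports_server(info.read_text(encoding="utf-8"), target_major):
            bad.append(info.parent.parent.name)  # <app>/appinfo/info.xml -> <app>
    return bad
```

For example, `incompatible_apps(Path("/var/www/html/custom_apps"), 30)` would list an installed app that still declares `max-version="29"`. That path is an assumption based on the official image’s layout; check `apps_paths` in your `config.php`.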

Exactly. I don’t know if the AIO image was used and how that all works (I stay away from that and the snap which is just an abomination) but no one should try to selfhost anything for prod unless they know exactly how it works. That and have a staging env. If you’re not up to the task then just pay for some commercial hosting (even if it’s just Nextcloud that is hosted elsewhere.)

I’ve run the nextcloud image (just docker.io/nextcloud IIRC) pinned for years with k8s and it’s durable and fine. It stays put and I just take the time to update my testing instance, make sure it all works with some cheap smoke tests, then upgrade prod.
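Cheap smoke tests like these are also what would have caught a silently disabled survey within a day instead of weeks. A minimal sketch using only the standard library: it checks Nextcloud’s `status.php` endpoint (which reports `installed`, `maintenance`, and `needsDbUpgrade` as JSON) and then fetches each page you care about, expecting a piece of text that only that page renders. The base URL, page paths, and expected text are placeholders, and the check assumes a disabled app’s page will not contain your form’s title.

```python
import json
import urllib.error
import urllib.request
from typing import Callable, List, Tuple

# fetch(url) -> (HTTP status, body); injectable so the logic can be tested offline.
Fetch = Callable[[str], Tuple[int, str]]


def http_get(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, ""  # 4xx/5xx responses are raised as HTTPError
    # Connection errors still raise, which also counts as a failed check.


def check_status(body: str) -> List[str]:
    """Problems reported by the status.php JSON."""
    data = json.loads(body)
    problems = []
    if not data.get("installed"):
        problems.append("not installed")
    if data.get("maintenance"):
        problems.append("maintenance mode is on")
    if data.get("needsDbUpgrade"):
        problems.append("database upgrade pending")
    return problems


def smoke_test(base_url: str, pages: List[Tuple[str, str]], fetch: Fetch = http_get) -> List[str]:
    """Return a list of failures; an empty list means everything looked fine."""
    failures = []
    status, body = fetch(base_url + "/status.php")
    if status != 200:
        failures.append(f"status.php returned HTTP {status}")
    else:
        failures += [f"status.php: {p}" for p in check_status(body)]
    for path, expected in pages:
        status, body = fetch(base_url + path)
        if status != 200:
            failures.append(f"{path} returned HTTP {status}")
        elif expected not in body:
            failures.append(f"{path} is missing {expected!r}")
    return failures
```

Run it from cron or your monitoring, e.g. `smoke_test("https://cloud.example.com", [("/apps/forms/s/<hash>", "Customer survey")])` with your form’s real share link, and alert whenever the list is non-empty.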

Yes, docker is the best way. Anything else is hell. It is still painful with docker but at least it is manageable

You can still choose to install the old version in NC30 and it will do so. And I upgraded to NC30 and my Forms app continues to be functional, so you can still give it a try.

The forms app is useless. It’s basically for surveys. I can’t see how you’d use it for signups.

I wrote signups but I meant survey (in another comment I wrote “I would have never checked the useless survey”)

I pretty much use NextCloud as just a storage device and nothing else. Using anything in the actual UI is just atrocious and the apps are not updated or just outright abandoned, and can’t be relied on.

I disagree. I use and depend on the apps including things like calendar and talk.

YOU CAN’T DO THAT

Us too, we only use it as a filelink provider for thunderbird and to host a useless survey that’s going to get filled once a quarter. That’s why nobody noticed the survey was disabled and that’s why we’re not doing multistage testing in multiple virtual machines. We are a super small company and ok with something that one day can be 3 days offline. Otherwise it would be cheaper to pay $100 to Surveymonkey and $100 to Dropbox

take a VM snapshot, upgrade the app, validate it still works as intended, if not, revert from snapshot
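That snapshot, upgrade, validate, revert loop can be wrapped so the revert step can’t be forgotten. A minimal sketch; `snapshot`, `upgrade`, `validate`, and `revert` are hypothetical callables you would back with your own hypervisor or filesystem tooling (VM snapshots, ZFS, etc.) and with whatever checks you trust, such as a few cheap smoke tests:

```python
from typing import Callable


def upgrade_with_rollback(
    snapshot: Callable[[], str],
    upgrade: Callable[[], None],
    validate: Callable[[], bool],
    revert: Callable[[str], None],
) -> bool:
    """Snapshot, upgrade, validate; revert to the snapshot if anything fails.

    Returns True if the upgrade was kept, False if it was rolled back.
    """
    snap_id = snapshot()  # take the snapshot before touching anything
    try:
        upgrade()
        ok = validate()
    except Exception:
        ok = False  # a crashing upgrade or check counts as a failed validation
    if not ok:
        revert(snap_id)
    return ok
```

This only works as a rollback if validation runs right after the upgrade: as discussed above, reverting to a snapshot weeks later throws away everything written since.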

Not to flame you, but really just an HTML form was all you needed? It’s a super simple feature…

sure, but why solve problems in 10 minutes when i can do it way more sophisticated using 10x more time and resources?

(at the moment reverted to the easy html form + php send mail)